Testing has a set of established terms that are nevertheless used quite inconsistently in everyday speech. We commonly talk only about bugs (the history of the term, and how it came to mean a software bug as well, is described for example in Wikipedia). In practice a bug means one of the following:

An error is any deviation in program behaviour from its specification. One common cause of expensive errors is a gap in the requirements: something has not been properly specified.

A fault or defect is caused by the execution of erroneous code, or arises when some unimplemented functionality should be executed. A fault is not necessarily visible in the behaviour of the system. Not all faults are caused by programming errors either; they can also be caused by missing requirements or deviations in the hardware.

A failure is an externally observable event in program functionality that is due to a fault. Not all faults lead to a failure, and conversely one fault can cause several failures in different parts of the software.

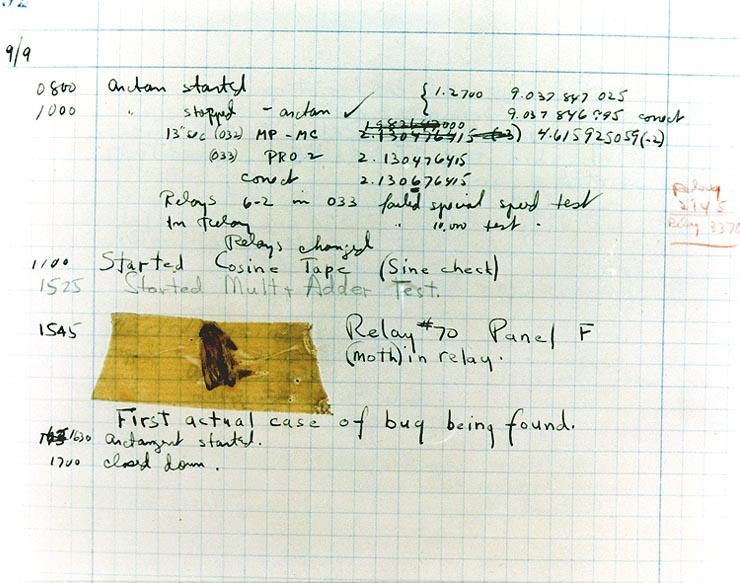

Picture of the so-called first software bug, Naval Surface Warfare Center, Dahlgren, VA, 1988. U.S. Naval Historical Center Online Library. Public Domain.

The specification of the program states the requirements set for the behaviour of the software, i.e. how the program should and should not work.
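The difference between a fault and a failure can be illustrated with a small sketch. The function `average` and its specification are assumptions made up for this example: the specification is taken to require `0.0` for an empty list, and the code does not implement that case.

```python
def average(values):
    """Assumed specification: return the arithmetic mean, or 0.0 for an empty list."""
    # Fault: the empty-list case required by the (assumed) specification
    # has not been implemented.
    return sum(values) / len(values)


# The fault is present in the code, but this input never triggers it,
# so no failure is observed.
print(average([2, 4, 6]))

# With another input the same fault becomes an externally observable failure.
try:
    average([])
except ZeroDivisionError as exc:
    print("failure observed:", exc)
```

A test set that only contains non-empty lists would never reveal this fault, even though it is there the whole time.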

Ways to Test

Software can be tested in many different ways, and testing can be done at every stage of software development. The aim is always to find errors in the code as early as possible. This emphasizes the key role of testing as part of implementing the software: it is done in parallel with functional development work. Testing can be, among other things:

Dynamic testing: executing the program with a suitable set of inputs

Static testing: trying to find errors in the code by inspecting the source code and/or documentation or by using static analysis tools

Positive testing: using “happy case” tests to try to ensure that the program does what it should do. A minority of tests are happy case tests.

Negative testing: “unhappy case” tests, i.e. cases that the specification describes poorly or not at all, erroneous cases, and other cases where there is no specified behaviour for the software.

Exploratory testing: instead of planning predefined test cases, the tester proceeds freely, creating new test cases on the fly based on the results of earlier tests.
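As a rough illustration of positive and negative testing, the sketch below runs a function with both kinds of input, which also makes it an instance of dynamic testing. The function `parse_age`, its accepted range of 0 to 150, and the helper `raises_value_error` are hypothetical and exist only for this example.

```python
def parse_age(text):
    """Hypothetical function under test: convert user input to an age in years."""
    age = int(text)  # int() raises ValueError for non-numeric text
    if not 0 <= age <= 150:
        raise ValueError(f"age out of range: {age}")
    return age


def raises_value_error(text):
    """Helper: report whether parse_age rejects the given input."""
    try:
        parse_age(text)
    except ValueError:
        return True
    return False


# Positive ("happy case") test: valid input gives the specified result.
assert parse_age("42") == 42

# Negative ("unhappy case") tests: erroneous or unspecified input is rejected
# in a controlled way instead of producing a nonsensical age.
assert raises_value_error("forty-two")
assert raises_value_error("-1")
assert raises_value_error("")
```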

Testing can also be grouped based on the part or stage of the software tested:

Unit testing: automatically executed tests that test the functionality of a single method or module in the program.

Integration testing: tests executed during implementation to test that the components call each other correctly.

System testing: testing the final, complete software to make sure it behaves according to the specification.

Acceptance testing: testing whether the delivered product matches what was ordered.

Regression testing: testing code that has already passed its tests to make sure newly added code did not break anything.
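The difference between the first two levels might look as follows in a minimal sketch that uses Python's standard `unittest` module. The function `apply_discount` and the class `Cart` are hypothetical names invented for this example.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical unit: reduce a price by the given percentage."""
    return round(price * (1 - percent / 100), 2)


class Cart:
    """Hypothetical component that depends on apply_discount."""

    def __init__(self, discount_percent=0):
        self.discount_percent = discount_percent
        self.prices = []

    def add(self, price):
        self.prices.append(price)

    def total(self):
        return apply_discount(sum(self.prices), self.discount_percent)


class UnitTests(unittest.TestCase):
    def test_apply_discount(self):
        # Unit test: a single function tested in isolation.
        self.assertEqual(apply_discount(200.0, 25), 150.0)


class IntegrationTests(unittest.TestCase):
    def test_cart_total_uses_discount(self):
        # Integration test: checks that Cart calls apply_discount correctly.
        cart = Cart(discount_percent=10)
        cart.add(40.0)
        cart.add(60.0)
        self.assertEqual(cart.total(), 90.0)
```

Saved to a file, the tests can be run with `python -m unittest <file>`. Running the same suite again after every later change to the code is what turns these tests into regression tests.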

A Good Test

Coming up with good test cases is often difficult, and it is especially hard for the developers. At the same time, sufficient information about the quality of the software can only be obtained through good tests that cover the code well. Bad test cases can paint too positive a picture of the quality of the program: the program can seem to work as it should only because the test cases have been selected poorly.

A good test case is a small test of program functionality:

What to test? e.g. division operator of a calculator

What is a good input? e.g. division by zero

What is the expected result? e.g. an error message, something other than a program crash
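The three questions above could translate into a test along these lines. The function `divide` and the exact wording of its error message are assumptions made for illustration, not a description of any particular calculator.

```python
def divide(a, b):
    """Hypothetical calculator division: returns the quotient or an error message."""
    if b == 0:
        return "Error: division by zero"
    return a / b


def test_division_by_zero_reports_error():
    # What to test: the division operator of the calculator.
    # Input: a divisor of zero.
    result = divide(1, 0)
    # Expected result: an error message, not a program crash.
    assert result == "Error: division by zero"


test_division_by_zero_reports_error()
```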

Test cases can be designed either before their execution or “on the fly”.

Each test case consists of:

Setting the test case up: initializing variables, putting the system in the state needed to run the test

Execution of the test case: running the test code and capturing all output

Evaluation of the result: determining the outcome of the test by comparing the results to the expected results, and reporting it as clearly as possible

Clean-up: restoring the system to its pre-test state by cleaning up after the test and freeing allocated resources
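The four phases map fairly directly onto the structure of a test class in Python's standard `unittest` module, as in the sketch below. The function `count_words` and the temporary-file scenario are hypothetical and chosen only to give the set-up and clean-up phases something to do.

```python
import os
import tempfile
import unittest


def count_words(text):
    """Hypothetical function under test: count whitespace-separated words."""
    return len(text.split())


class WordCountTest(unittest.TestCase):
    def setUp(self):
        # Set-up: put the system in the state the test needs (a file with known content).
        fd, self.path = tempfile.mkstemp(suffix=".txt")
        with os.fdopen(fd, "w") as handle:
            handle.write("one two three")

    def tearDown(self):
        # Clean-up: restore the pre-test state by removing the temporary file.
        os.remove(self.path)

    def test_counts_words(self):
        # Execution: run the code under test and capture its output.
        with open(self.path) as handle:
            count = count_words(handle.read())
        # Evaluation: compare the result to the expected result with a clear message.
        self.assertEqual(count, 3, "expected three words in the test file")
```

The framework calls `setUp` before and `tearDown` after each test method, so the clean-up also runs when the evaluation step fails.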

Unit testing in particular is the responsibility of the programmer. As continuous development has become common, every software developer must also test their own code.

Software testing (duration 15:57)